DPDK

DPDK is a set of libraries designed for high-performance packet processing in user space, bypassing the kernel's networking stack to achieve low-latency and high-throughput networking.

In DPDK, packets are received directly from the NIC by poll mode drivers, processed in user space, and then sent out, all without kernel intervention, which reduces latency and eliminates context switches. In contrast, the traditional kernel path receives packets through interrupt handling: the kernel processes them in its networking stack (the TCP/IP stack), and handing the data to an application can involve context switches between user space and the kernel.

Kernel-based networking system

NIC receives a packet → Interrupts the CPU → Kernel processes packet

- NIC: Receives data packets from the network.

- CPU Interrupt: The NIC generates an interrupt to notify the CPU that data is available for processing.

- Driver: The operating system's network driver responds to the interrupt and processes the packet, moving it into kernel memory.

- Networking Stack: The data is handed off to the kernel's networking stack for further processing (e.g., routing, protocol handling).

DPDK

NIC receives a packet → DPDK polls the NIC directly → DPDK application processes packet

- NIC: The NIC still receives data packets from the network, but instead of generating interrupts, it remains passive.

- Polling (No Interrupt): DPDK uses a poll mode driver (PMD), where the CPU actively polls the NIC to check if new packets are available. There are no interrupts to trigger packet processing.

- User Space (DPDK Application): The packets are directly transferred to user space without going through the kernel's networking stack. DPDK libraries manage the packet buffers, and the application processes them directly in user space.

- Networking Stack: DPDK bypasses the kernel's traditional networking stack entirely. If kernel networking functionality is needed (e.g., routing), DPDK can use KNI (Kernel Network Interface) to interact with the kernel. Otherwise, all packet processing happens in user space for performance gains.

Info

In DPDK, packets are stored in memory buffers (rte_mbuf) and processed in user space.

Setup

VM

WSL probably won't help since it lacks direct hardware access, kernel bypass, and hugepage support. I'm running Ubuntu 18.04.6 in VMware Workstation Pro and will set up DPDK 19.08.

Info

There are two network adapters: one will be unbound from the kernel and bound to DPDK, while the other will be used for everything else. Bridged and NAT can both work.

VM configuration

Info

Quit VMware Workstation before editing the .vmx file, then restart it.

The e1000 virtual NIC does not support multi-queue functionality, which limits its performance in DPDK applications. DPDK relies on NICs that support multi-queue or RSS (Receive Side Scaling), allowing packets to be distributed across multiple CPU cores for parallel processing. We have 8 CPUs for the VM and will have 8 receive queues (rx_queues) for DPDK. Wake on Packet Receive can be useful in some use cases, but it's not essential. We also enabled keyboard.vusb to reduce keyboard lag.

Info

If you experience issues where you have trouble keeping input focus inside the VM, it could be related to how VMware handles input grabbing and input ungrabbing. Make sure they are set to "Normal".

Dev environment

Essential Packages

# ifconfig

sudo apt install net-tools

# vim

sudo apt install vim

# gcc

sudo apt install gcc

# make

sudo apt install make

# dpdk dependency NUMA

sudo apt install libnuma-dev

# python

# some DPDK scripts rely on python

sudo apt install python

# pkg-config

# some DPDK Makefiles use pkg-config

sudo apt install pkg-config

Visual Studio Code

# https://code.visualstudio.com/docs/setup/linux#_install-vs-code-on-linux

sudo snap install --classic code # or code-insiders

Git

# set up local git client

sudo apt install git

git config --global user.name "tianb2"

git config --global user.email "[email protected]"

# set up ssh

# either directly or with gh

# https://github.com/cli/cli/blob/trunk/docs/install_linux.md

gh auth login

Network interfaces

We will bind the ens160 interface to DPDK. It is configured to use the vmxnet3 driver and has 8 receive queues.

tianb@ubuntu:~/networks-d$ ifconfig

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.238.136 netmask 255.255.255.0 broadcast 192.168.238.255

inet6 fe80::ea08:6cee:823c:4deb prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:33:d5:d9 txqueuelen 1000 (Ethernet)

RX packets 465443 bytes 694675688 (694.6 MB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2556 bytes 414816 (414.8 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens160: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.238.135 netmask 255.255.255.0 broadcast 192.168.238.255

inet6 fe80::3ec:13a3:1f2c:74f6 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:33:d5:cf txqueuelen 1000 (Ethernet)

RX packets 119157 bytes 166775204 (166.7 MB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 37507 bytes 3431719 (3.4 MB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 1222 bytes 139919 (139.9 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 1222 bytes 139919 (139.9 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

tianb@ubuntu:~/networks-d$ cat /proc/interrupts | grep ens33

19: 0 26639 0 0 0 0 0 71 IO-APIC 19-fasteoi ens33

tianb@ubuntu:~/networks-d$ cat /proc/interrupts | grep ens160

56: 0 0 195 18 16228 2 430 0 PCI-MSI 1572864-edge ens160-rxtx-0

57: 0 0 0 0 0 0 0 13864 PCI-MSI 1572865-edge ens160-rxtx-1

58: 4586 0 0 0 0 0 0 0 PCI-MSI 1572866-edge ens160-rxtx-2

59: 0 19 15 0 0 1045 328 993 PCI-MSI 1572867-edge ens160-rxtx-3

60: 0 0 13 22 0 0 467 299 PCI-MSI 1572868-edge ens160-rxtx-4

61: 0 0 0 392 25 1405 0 2 PCI-MSI 1572869-edge ens160-rxtx-5

62: 0 0 2 876 579 7046 22 2245 PCI-MSI 1572870-edge ens160-rxtx-6

63: 220 0 0 421 0 302 6 239 PCI-MSI 1572871-edge ens160-rxtx-7

64: 0 0 0 0 0 0 0 0 PCI-MSI 1572872-edge ens160-event-8

DPDK

Enable Hugepage

# If you change this file, run 'update-grub' afterwards to update

# /boot/grub/grub.cfg.

# For full documentation of the options in this file, see:

# info -f grub -n 'Simple configuration'

GRUB_DEFAULT=0

GRUB_TIMEOUT_STYLE=hidden

GRUB_TIMEOUT=0

GRUB_DISTRIBUTOR=`lsb_release -i -s 2> /dev/null || echo Debian`

- GRUB_CMDLINE_LINUX_DEFAULT="quiet"

+ GRUB_CMDLINE_LINUX_DEFAULT="quiet default_hugepagesz=1G hugepagesz=2M hugepages=1024"

GRUB_CMDLINE_LINUX="find_preseed=/preseed.cfg auto noprompt priority=critical locale=en_US"

# Uncomment to enable BadRAM filtering, modify to suit your needs

# This works with Linux (no patch required) and with any kernel that obtains

# the memory map information from GRUB (GNU Mach, kernel of FreeBSD ...)

#GRUB_BADRAM="0x01234567,0xfefefefe,0x89abcdef,0xefefefef"

# Uncomment to disable graphical terminal (grub-pc only)

#GRUB_TERMINAL=console

# The resolution used on graphical terminal

# note that you can use only modes which your graphic card supports via VBE

# you can see them in real GRUB with the command `vbeinfo'

#GRUB_GFXMODE=640x480

# Uncomment if you don't want GRUB to pass "root=UUID=xxx" parameter to Linux

#GRUB_DISABLE_LINUX_UUID=true

# Uncomment to disable generation of recovery mode menu entries

#GRUB_DISABLE_RECOVERY="true"

# Uncomment to get a beep at grub start

#GRUB_INIT_TUNE="480 440 1"

Compile DPDK from source

# download DPDK 19.08.2 from https://core.dpdk.org/download/

# unpack it somewhere. for me it's ~/share/dpdk

cd ~/share/dpdk/dpdk-stable-19.08.2

# build

make config T=x86_64-native-linux-gcc

make

# install

sudo make install DESTDIR=/usr/local T=x86_64-native-linux-gcc

x86_64-native-linux-gcc

linux and linuxapp are build targets of DPDK. If you plan to modify the DPDK source code, it's recommended to use linux. native means that you are compiling the code for the local architecture and platform, without the need for cross-compilation. x86_64 refers to the 64-bit x86 architecture, often called AMD64 or Intel 64, depending on the processor manufacturer.

Disable the interface for DPDK

We will bind ens160 to DPDK. Before doing so, we need to bring the interface down (for example with sudo ifconfig ens160 down) so that it is no longer managed by the kernel networking stack. After this, it won't appear in the ifconfig output:

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.238.136 netmask 255.255.255.0 broadcast 192.168.238.255

inet6 fe80::ea08:6cee:823c:4deb prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:33:d5:d9 txqueuelen 1000 (Ethernet)

RX packets 492351 bytes 730617776 (730.6 MB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 5125 bytes 793880 (793.8 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 1691 bytes 205683 (205.6 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 1691 bytes 205683 (205.6 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Setup DPDK

# switch to the root user

sudo su

# set up RTE_SDK and RTE_TARGET

echo 'export RTE_SDK=/home/tianb/share/dpdk/dpdk-stable-19.08.2/' >> ~/.bashrc

echo 'export RTE_TARGET=x86_64-native-linux-gcc' >> ~/.bashrc

source ~/.bashrc

# run from ~/share/dpdk/dpdk-stable-19.08.2

./usertools/dpdk-setup.sh

ERROR: Target does not have the DPDK UIO Kernel Module.

If you see the error message, it typically indicates that RTE_SDK or

RTE_TARGET environment variables are not set correctly for the root user.

----------------------------------------------------------

Step 2: Setup linux environment

----------------------------------------------------------

[43] Insert IGB UIO module

[44] Insert VFIO module

[45] Insert KNI module

[46] Setup hugepage mappings for non-NUMA systems

[47] Setup hugepage mappings for NUMA systems

[48] Display current Ethernet/Baseband/Crypto device settings

[49] Bind Ethernet/Baseband/Crypto device to IGB UIO module

[50] Bind Ethernet/Baseband/Crypto device to VFIO module

[51] Setup VFIO permissions

We need to execute options 43, 44, 45, 46, 47, and 49, then exit with option 60.

- Option 43: Enter

- Option 44: Enter

- Option 45: Enter

- Option 46: Enter 512

- Option 47: Enter 512

- Option 49: Choose the PCI address corresponding to the 'VMXNET3 Ethernet Controller', which in my case is 0000:03:00.0. This corresponds to the ens160 interface that was brought down earlier. This step binds the NIC to DPDK.

Option: 43

Unloading any existing DPDK UIO module

Loading uio module

Loading DPDK UIO module

Option: 44

Unloading any existing VFIO module

Loading VFIO module

chmod /dev/vfio

OK

Option: 45

Unloading any existing DPDK KNI module

Loading DPDK KNI module

Option: 46

Removing currently reserved hugepages

Unmounting /mnt/huge and removing directory

Input the number of 2048kB hugepages

Example: to have 128MB of hugepages available in a 2MB huge page system,

enter '64' to reserve 64 * 2MB pages

Number of pages: 512

Reserving hugepages

Creating /mnt/huge and mounting as hugetlbfs

Option: 47

Removing currently reserved hugepages

Unmounting /mnt/huge and removing directory

Input the number of 2048kB hugepages for each node

Example: to have 128MB of hugepages available per node in a 2MB huge page system,

enter '64' to reserve 64 * 2MB pages on each node

Number of pages for node0: 512

Reserving hugepages

Creating /mnt/huge and mounting as hugetlbfs

Option: 49

Network devices using kernel driver

===================================

0000:02:01.0 '82545EM Gigabit Ethernet Controller (Copper) 100f' if=ens33 drv=e1000 unused=igb_uio,vfio-pci *Active*

0000:03:00.0 'VMXNET3 Ethernet Controller 07b0' if=ens160 drv=vmxnet3 unused=igb_uio,vfio-pci

No 'Baseband' devices detected

==============================

No 'Crypto' devices detected

============================

No 'Eventdev' devices detected

==============================

No 'Mempool' devices detected

=============================

No 'Compress' devices detected

==============================

No 'Misc (rawdev)' devices detected

===================================

Enter PCI address of device to bind to IGB UIO driver: 0000:03:00.0

OK

[60] Exit Script

Option: 60

Note

The configuration is not persistent, so you may need to run these options again after rebooting the VM.

Info

There are also options in dpdk-setup.sh, such as option 39, which compiles

and installs DPDK. However, it reports a missing DESTDIR, so I just built it

directly using make.

Testing

A quick program to verify that everything is set up correctly.

#include <stdio.h>

#include <rte_eal.h>

int main(int argc, char **argv) {

// init DPDK environment

if (rte_eal_init(argc, argv) < 0) {

// the newline character '\n' is important for the message

// to be properly flushed and showup in stderr

rte_exit(EXIT_FAILURE, "eal init failed\n");

}

printf("hello dpdk\n");

}

# Refer to DPDK examples in the source directory

# binary name

APP = hellodpdk

# all source are stored in SRCS-y

SRCS-y := main.c

# Build using pkg-config variables if possible

ifeq ($(shell pkg-config --exists libdpdk && echo 0),0)

all: shared

.PHONY: shared static

shared: build/$(APP)-shared

ln -sf $(APP)-shared build/$(APP)

static: build/$(APP)-static

ln -sf $(APP)-static build/$(APP)

PKGCONF=pkg-config --define-prefix

PC_FILE := $(shell $(PKGCONF) --path libdpdk)

CFLAGS += -O3 $(shell $(PKGCONF) --cflags libdpdk)

LDFLAGS_SHARED = $(shell $(PKGCONF) --libs libdpdk)

LDFLAGS_STATIC = -Wl,-Bstatic $(shell $(PKGCONF) --static --libs libdpdk)

build/$(APP)-shared: $(SRCS-y) Makefile $(PC_FILE) | build

$(CC) $(CFLAGS) $(SRCS-y) -o $@ $(LDFLAGS) $(LDFLAGS_SHARED)

build/$(APP)-static: $(SRCS-y) Makefile $(PC_FILE) | build

$(CC) $(CFLAGS) $(SRCS-y) -o $@ $(LDFLAGS) $(LDFLAGS_STATIC)

build:

@mkdir -p $@

.PHONY: clean

clean:

rm -f build/$(APP) build/$(APP)-static build/$(APP)-shared

test -d build && rmdir -p build || true

else

ifeq ($(RTE_SDK),)

$(error "Please define RTE_SDK environment variable")

endif

# Default target, detect a build directory, by looking for a path with a .config

RTE_TARGET ?= $(notdir $(abspath $(dir $(firstword $(wildcard $(RTE_SDK)/*/.config)))))

include $(RTE_SDK)/mk/rte.vars.mk

CFLAGS += -O3

CFLAGS += $(WERROR_FLAGS)

include $(RTE_SDK)/mk/rte.extapp.mk

endif

tianb@ubuntu:~/networks-d/dpdk3$ sudo su

root@ubuntu:/home/tianb/networks-d/dpdk3# make

CC main.o

LD hellodpdk

INSTALL-APP hellodpdk

INSTALL-MAP hellodpdk.map

root@ubuntu:/home/tianb/networks-d/dpdk3# ./build/hellodpdk

EAL: Detected 8 lcore(s)

EAL: Detected 1 NUMA nodes

EAL: Multi-process socket /var/run/dpdk/rte/mp_socket

EAL: Selected IOVA mode 'PA'

EAL: No available hugepages reported in hugepages-1048576kB

EAL: Probing VFIO support...

EAL: VFIO support initialized

EAL: PCI device 0000:02:01.0 on NUMA socket -1

EAL: Invalid NUMA socket, default to 0

EAL: probe driver: 8086:100f net_e1000_em

EAL: PCI device 0000:03:00.0 on NUMA socket -1

EAL: Invalid NUMA socket, default to 0

EAL: probe driver: 15ad:7b0 net_vmxnet3

hello dpdk

Run with root privileges

DPDK applications usually need root privileges because they require direct access to hardware resources such as NICs, hugepages, and physical addresses, all of which need elevated permissions. Without them, you may see the following output:

tianb@ubuntu:~/networks-d/dpdk3$ ./build/hellodpdk

EAL: Detected 8 lcore(s)

EAL: Detected 1 NUMA nodes

EAL: Multi-process socket /run/user/1000/dpdk/rte/mp_socket

EAL: FATAL: Cannot use IOVA as 'PA' since physical addresses are not available

EAL: Cannot use IOVA as 'PA' since physical addresses are not available

EAL: Error - exiting with code: 1

Cause: eal init failed

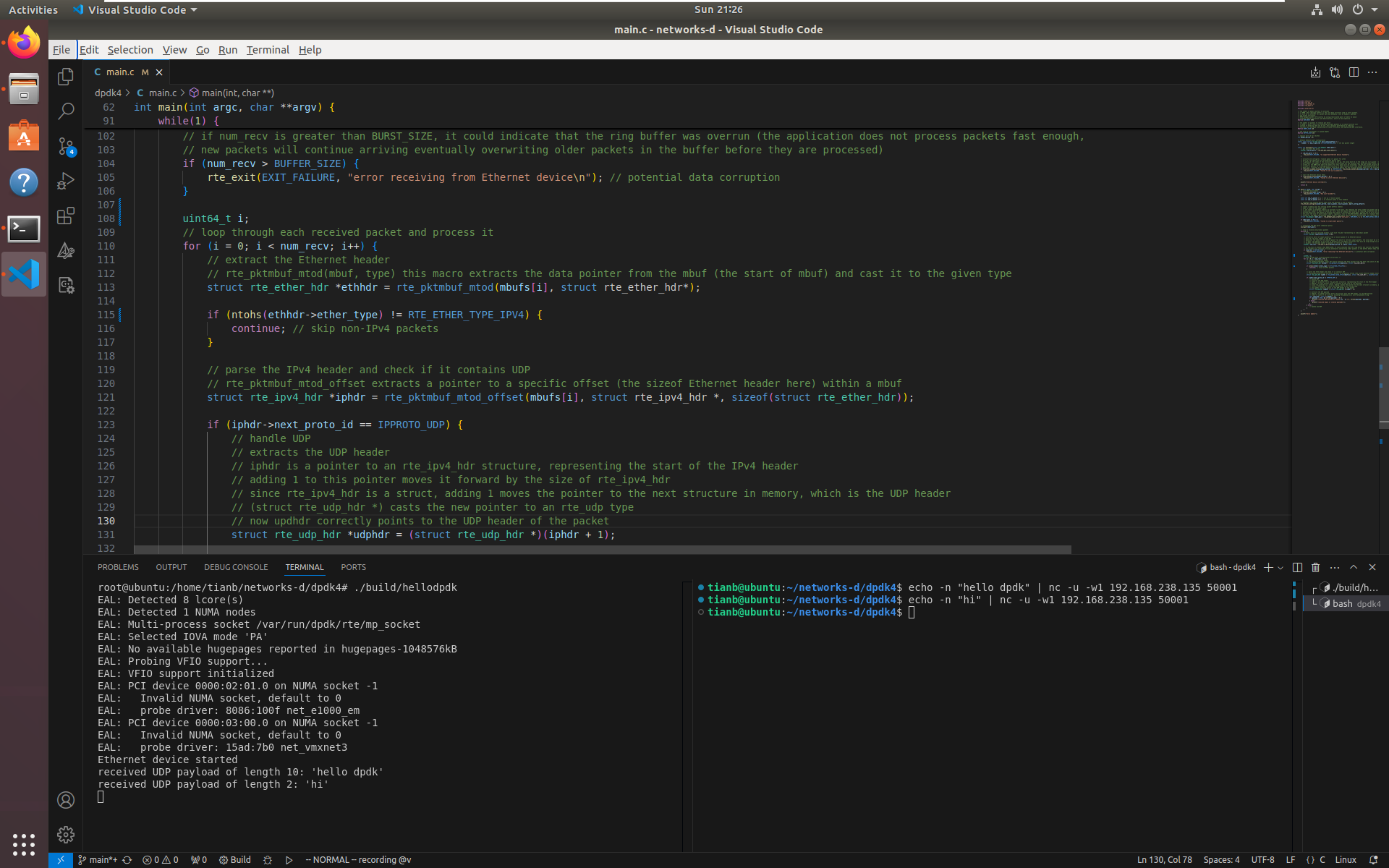

Demo: DPDK & UDP

We will create a DPDK application that receives and processes UDP packets.

Once we bind the NIC ens160 to DPDK and unbind it from the kernel, it will no longer be managed by the kernel's networking stack. As a result, it will not show up in ifconfig, and the kernel will not handle ARP or IP routing for that interface. To send UDP packets (or any IP packets) to this NIC, we can manually add a static ARP entry that maps its IP address to its MAC address. These are the values the interface had before it was unbound:

ens160: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.238.135 netmask 255.255.255.0 broadcast 192.168.238.255

inet6 fe80::3ec:13a3:1f2c:74f6 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:33:d5:cf txqueuelen 1000 (Ethernet)

RX packets 119157 bytes 166775204 (166.7 MB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 37507 bytes 3431719 (3.4 MB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

# set up ARP for ens160 managed by DPDK

sudo arp -s 192.168.238.135 00:0c:29:33:d5:cf

# send a UDP packet

#

# echo -n <msg>

# by default, echo appends a newline at the end of the output.

# the -n option prevents echo from appending the newline.

#

# nc -u -w1 <ip> <port>

# -u: use UDP

# -w1: set a timeout of 1 second

# <ip>: the destination ip

# <port>: the destination port. it can be any port for this example

echo -n "hello dpdk" | nc -u -w1 192.168.238.135 50001

#include <stdio.h>

#include <rte_eal.h>

#include <rte_ethdev.h>

#include <rte_mbuf.h>

#include <arpa/inet.h>

// the number of memory buffers to allocate

// in DPDK, mbuf (message buffer) is the core data structure used to store packets.

// it acts as a container for packet data and metadata, such as headers, payload,

// and packet attributes.

// DPDK manages packets efficiently by using preallocated pools of mbufs to avoid

// frequent memory allocations and deallocations, which can be expensive.

#define NUM_MBUFS 4096

// the number of packets to receive per burst

// in DPDK, a burst refers to processing multiple packets in a single function call

// instead of handling them one by one. this improves performance by reducing

// function call overhead, improving CPU cache efficiency, and amortizing the cost of accessing the NIC queues.

#define BURST_SIZE 128

// the size of ring buffer (rx queue depth)

#define BUFFER_SIZE 128

// logical port id for the NIC

int global_portid = 0;

// default Ethernet port configuration

static const struct rte_eth_conf port_config_default = {

.rxmode = { .max_rx_pkt_len = RTE_ETHER_MAX_LEN } // set max packet length

};

static int init_port(struct rte_mempool *mbuf_pool) {

// get available NIC ports

uint16_t nb_sys_ports = rte_eth_dev_count_avail();

if (nb_sys_ports == 0) {

rte_exit(EXIT_FAILURE, "no supported Ethernet device found\n");

}

// allocate and configure a receive queue (rx queue) for a NIC

// port_id: the identifier of the Ethernet device (NIC)

// rx_queue_id: the index of the receive queue to set up. the value must be in the range [0, nb_rx_queue - 1] previously supplied to rte_eth_dev_configure(). we only have 1 rx queue in this example

// nb_rx_desc: the number of receive descriptors to allocate for the receive ring. each descriptor holds a pointer to a packet buffer (mbuf) where the received packet will be stored

    // socket_id: the NUMA node where the memory for this queue will be allocated. rte_eth_dev_socket_id(port_id) returns the NUMA socket to which an Ethernet device (NIC) is connected

// rx_conf: a pointer to a configuration structure of type rte_eth_rxconf that allows fine-grained control over the rx queue

// mbuf_pool: a pointer to the rte_mempool from which memory for the received packets mbufs will be allocated

if (rte_eth_rx_queue_setup(global_portid, 0, BUFFER_SIZE, rte_eth_dev_socket_id(global_portid), NULL, mbuf_pool) < 0) {

rte_exit(EXIT_FAILURE, "failed to set up rx queue\n");

}

// start the Ethernet device (NIC)

if (rte_eth_dev_start(global_portid) < 0) {

rte_exit(EXIT_FAILURE, "failed to start Ethernet device\n");

}

printf("Ethernet device started\n");

return 0;

}

int main(int argc, char **argv) {

// init DPDK environment

if (rte_eal_init(argc, argv) < 0) {

rte_exit(EXIT_FAILURE, "EAL init failed\n");

}

const int num_rx_queues = 1; // set up 1 receive queue

const int num_tx_queues = 0; // no transmit queue in this example

    // configure the network device (NIC) with the defined rx and tx queues.
    if (rte_eth_dev_configure(global_portid, num_rx_queues, num_tx_queues, &port_config_default) < 0) {
        rte_exit(EXIT_FAILURE, "failed to configure Ethernet device\n");
    }

// create a memory pool for storing packet buffers (mbufs)

// name: a name for the memory pool

// n: the number of elements (mbufs) to allocate in the pool. this defines the total number of packets the pool can hold

// cache_size: number of mbufs to cache per core. this can improve performance by reducing contention when multiple cores are using the pool

    // priv_size: the size of the application private area between the rte_mbuf structure and the data buffer, usually set to 0 if not needed
    // data_room_size: the size of the data buffer in each mbuf, including headroom. typically, this is RTE_MBUF_DEFAULT_BUF_SIZE for standard Ethernet frames

// socket_id: the NUMA (non-uniform memory access) node where the pool should be allocated. use rte_socket_id() to select the appropriate socket for the local core

struct rte_mempool *mbuf_pool = rte_pktmbuf_pool_create("mbuf_pool", NUM_MBUFS, 0, 0, RTE_MBUF_DEFAULT_BUF_SIZE, rte_socket_id());

if (mbuf_pool == NULL) {

rte_exit(EXIT_FAILURE, "failed to create mbuf pool\n");

}

// initialize the NIC ports (Ethernet ports)

init_port(mbuf_pool);

// loop to receive and process packets

while(1) {

// hold a burst of received packets, with each rte_mbuf representing an individual packet

struct rte_mbuf *mbufs[BURST_SIZE] = {0};

// retrieve a burst of input packets from a receive queue of an Ethernet device

// port_id: the port number of the NIC

// queue_id: the index of the receive queue from which to retrieve input packets. the value must be in the range [0, nb_rx_queue - 1] previously supplied to rte_eth_dev_configure(). we only have one rx queue in this example

// rx_pkts: the address of an array of pointers to *rte_mbuf* structures that must be large enough to store *nb_pkts* pointers in it

// nb_pkts: the maximum number of packets to retrieve in this burst

        // the return value is the number of packets actually retrieved (0 if the queue is empty)
        uint16_t num_recv = rte_eth_rx_burst(global_portid, 0, mbufs, BURST_SIZE);

        // rte_eth_rx_burst never returns more than nb_pkts, so a larger value would point to a driver bug.
        // if the application does not process packets fast enough, the NIC drops new packets once the rx ring is full;
        // that shows up in the port statistics, not in this return value
        if (num_recv > BURST_SIZE) {
            rte_exit(EXIT_FAILURE, "error receiving from Ethernet device\n");
        }

uint64_t i;

// loop through each received packet and process it

for (i = 0; i < num_recv; i++) {

// extract the Ethernet header

// rte_pktmbuf_mtod(mbuf, type) this macro extracts the data pointer from the mbuf (the start of mbuf) and cast it to the given type

struct rte_ether_hdr *ethhdr = rte_pktmbuf_mtod(mbufs[i], struct rte_ether_hdr*);

            if (ntohs(ethhdr->ether_type) != RTE_ETHER_TYPE_IPV4) {
                // skip non-IPv4 packets, but return the mbuf to the pool first
                rte_pktmbuf_free(mbufs[i]);
                continue;
            }

// parse the IPv4 header and check if it contains UDP

// rte_pktmbuf_mtod_offset extracts a pointer to a specific offset (the sizeof Ethernet header here) within a mbuf

struct rte_ipv4_hdr *iphdr = rte_pktmbuf_mtod_offset(mbufs[i], struct rte_ipv4_hdr *, sizeof(struct rte_ether_hdr));

if (iphdr->next_proto_id == IPPROTO_UDP) {

// handle UDP

// extracts the UDP header

                // iphdr is a pointer to an rte_ipv4_hdr structure, representing the start of the IPv4 header
                // adding 1 to this pointer moves it forward by sizeof(struct rte_ipv4_hdr), which is 20 bytes
                // this lands on the UDP header only if the IPv4 header carries no options (IHL == 5), which we assume here
                // (struct rte_udp_hdr *) casts the new pointer to the rte_udp_hdr type
                // now udphdr points to the UDP header of the packet

struct rte_udp_hdr *udphdr = (struct rte_udp_hdr *)(iphdr + 1);

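                // extract the UDP payload
                // adding 1 to the udphdr pointer moves it past the UDP header, to the UDP payload
                // the payload is not null-terminated, so take its length from the UDP header:
                // dgram_len covers the UDP header plus the payload, in network byte order
                char *payload = (char *)(udphdr + 1);
                uint16_t dgram_len = ntohs(udphdr->dgram_len);
                if (dgram_len > sizeof(struct rte_udp_hdr)) {
                    int payload_len = (int)(dgram_len - sizeof(struct rte_udp_hdr));
                    // %.*s prints exactly payload_len bytes instead of relying on a terminating '\0'
                    printf("received UDP payload of length %d: '%.*s'\n", payload_len, payload_len, payload);
                } else {
                    printf("received empty or invalid payload\n");
                }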

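            } else {
                // handle non-UDP
            }

            // return the mbuf to the pool; otherwise the pool is exhausted after NUM_MBUFS packets
            rte_pktmbuf_free(mbufs[i]);
        }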

}

printf("hello dpdk\n");

}

# Refer to DPDK examples in the source directory

# binary name

APP = hellodpdk

# all source are stored in SRCS-y

SRCS-y := main.c

# Build using pkg-config variables if possible

ifeq ($(shell pkg-config --exists libdpdk && echo 0),0)

all: shared

.PHONY: shared static

shared: build/$(APP)-shared

ln -sf $(APP)-shared build/$(APP)

static: build/$(APP)-static

ln -sf $(APP)-static build/$(APP)

PKGCONF=pkg-config --define-prefix

PC_FILE := $(shell $(PKGCONF) --path libdpdk)

CFLAGS += -O3 $(shell $(PKGCONF) --cflags libdpdk)

LDFLAGS_SHARED = $(shell $(PKGCONF) --libs libdpdk)

LDFLAGS_STATIC = -Wl,-Bstatic $(shell $(PKGCONF) --static --libs libdpdk)

build/$(APP)-shared: $(SRCS-y) Makefile $(PC_FILE) | build

$(CC) $(CFLAGS) $(SRCS-y) -o $@ $(LDFLAGS) $(LDFLAGS_SHARED)

build/$(APP)-static: $(SRCS-y) Makefile $(PC_FILE) | build

$(CC) $(CFLAGS) $(SRCS-y) -o $@ $(LDFLAGS) $(LDFLAGS_STATIC)

build:

@mkdir -p $@

.PHONY: clean

clean:

rm -f build/$(APP) build/$(APP)-static build/$(APP)-shared

test -d build && rmdir -p build || true

else

ifeq ($(RTE_SDK),)

$(error "Please define RTE_SDK environment variable")

endif

# Default target, detect a build directory, by looking for a path with a .config

RTE_TARGET ?= $(notdir $(abspath $(dir $(firstword $(wildcard $(RTE_SDK)/*/.config)))))

include $(RTE_SDK)/mk/rte.vars.mk

CFLAGS += -O3

CFLAGS += $(WERROR_FLAGS)

include $(RTE_SDK)/mk/rte.extapp.mk

endif

root@ubuntu:/home/tianb/networks-d/dpdk4# ./build/hellodpdk

EAL: Detected 8 lcore(s)

EAL: Detected 1 NUMA nodes

EAL: Multi-process socket /var/run/dpdk/rte/mp_socket

EAL: Selected IOVA mode 'PA'

EAL: No available hugepages reported in hugepages-1048576kB

EAL: Probing VFIO support...

EAL: VFIO support initialized

EAL: PCI device 0000:02:01.0 on NUMA socket -1

EAL: Invalid NUMA socket, default to 0

EAL: probe driver: 8086:100f net_e1000_em

EAL: PCI device 0000:03:00.0 on NUMA socket -1

EAL: Invalid NUMA socket, default to 0

EAL: probe driver: 15ad:7b0 net_vmxnet3

Ethernet device started

received UDP payload of length 10: 'hello dpdk'

received UDP payload of length 2: 'hi'

To send a UDP response, we need to configure a transmit queue and construct a packet following the protocols.

static int encode_udp_packet(uint8_t *msg, uint8_t *data, uint16_t total_len) {

// ether header

struct rte_ether_hdr *eth = (struct rte_ether_hdr *)msg;

rte_memcpy(eth->d_addr.addr_bytes, global_dmac, RTE_ETHER_ADDR_LEN);

rte_memcpy(eth->s_addr.addr_bytes, global_smac, RTE_ETHER_ADDR_LEN);

eth->ether_type = htons(RTE_ETHER_TYPE_IPV4);

// ip header

struct rte_ipv4_hdr *ip = (struct rte_ipv4_hdr *)(eth + 1);

ip->version_ihl = 0x45; // set the IP version (IPv4) and header length (5 * 4 = 20 bytes)

ip->type_of_service = 0; // default

ip->total_length = htons(total_len - sizeof(struct rte_ether_hdr)); // ip packet length

ip->packet_id = 0;

ip->fragment_offset = 0;

ip->time_to_live = 64; // TTL, the maximum number of hops through routers/gateways

ip->next_proto_id = IPPROTO_UDP;

ip->src_addr = global_sip;

ip->dst_addr = global_dip;

    ip->hdr_checksum = 0; // clear it first so that a stale value does not influence the checksum

ip->hdr_checksum = rte_ipv4_cksum(ip);

// udp header

struct rte_udp_hdr *udp = (struct rte_udp_hdr *)(ip + 1);

udp->src_port = global_sport;

udp->dst_port = global_dport;

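    uint16_t udplen = total_len - sizeof(struct rte_ether_hdr) - sizeof(struct rte_ipv4_hdr);
    udp->dgram_len = htons(udplen); // udp datagram length (udp header + payload)

    // data
    // copy only the payload bytes: udplen also counts the 8-byte udp header
    rte_memcpy((uint8_t *)(udp + 1), data, udplen - sizeof(struct rte_udp_hdr));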

udp->dgram_cksum = 0; // clear it first

udp->dgram_cksum = rte_ipv4_udptcp_cksum(ip, udp);

return 0;

}

nc can wait for a response if we specify a long enough wait time using -w, for example -w3 to wait up to three seconds.

Put everything together:

#include <stdio.h>

#include <rte_eal.h>

#include <rte_ethdev.h>

#include <rte_mbuf.h>

#include <arpa/inet.h>

// the number of memory buffers to allocate

// in DPDK, mbuf (message buffer) is the core data structure used to store packets.

// it acts as a container for packet data and metadata, such as headers, payload,

// and packet attributes.

// DPDK manages packets efficiently by using preallocated pools of mbufs to avoid

// frequent memory allocations and deallocations, which can be expensive.

#define NUM_MBUFS 4096

// the number of packets to receive per burst

// in DPDK, a burst refers to processing multiple packets in a single function call

// instead of handling them one by one. this improves performance by reducing

// function call overhead, improving CPU cache efficiency, and amortizing the cost of accessing the NIC queues.

#define BURST_SIZE 128

// the size of ring buffer (rx queue depth)

#define RX_BUFFER_SIZE 128

// the size of ring buffer (tx queue depth)

#define TX_BUFFER_SIZE 512

// global variables to store network parameters (a quick way)

uint8_t global_smac[RTE_ETHER_ADDR_LEN]; // the source MAC address

uint8_t global_dmac[RTE_ETHER_ADDR_LEN]; // the destination MAC address

uint32_t global_sip; // the source ip

uint32_t global_dip; // the destination ip

uint16_t global_sport; // the source port

uint16_t global_dport; // the destination port

// logical port id for the NIC

int global_portid = 0;

// default Ethernet port configuration

static const struct rte_eth_conf port_config_default = {

.rxmode = { .max_rx_pkt_len = RTE_ETHER_MAX_LEN } // set max packet length

};

static int init_port(struct rte_mempool *mbuf_pool) {

// get available NIC ports

uint16_t nb_sys_ports = rte_eth_dev_count_avail();

if (nb_sys_ports == 0) {

rte_exit(EXIT_FAILURE, "no supported Ethernet device found\n");

}

// get the information for the specified Ethernet device

struct rte_eth_dev_info dev_info;

rte_eth_dev_info_get(global_portid, &dev_info);

const int num_rx_queues = 1; // set up 1 receive queue

const int num_tx_queues = 1; // set up 1 transmit queue

    // configure the network device (NIC) with the defined rx and tx queues.
    if (rte_eth_dev_configure(global_portid, num_rx_queues, num_tx_queues, &port_config_default) < 0) {
        rte_exit(EXIT_FAILURE, "failed to configure Ethernet device\n");
    }

// allocate and configure a receive queue (rx queue) for a NIC

// port_id: the identifier of the Ethernet device (NIC)

// rx_queue_id: the index of the receive queue to set up. the value must be in the range [0, nb_rx_queue - 1] previously supplied to rte_eth_dev_configure(). we only have 1 rx queue in this example

// nb_rx_desc: the number of receive descriptors to allocate for the receive ring. each descriptor holds a pointer to a packet buffer (mbuf) where the received packet will be stored

    // socket_id: the NUMA node where the memory for this queue will be allocated. rte_eth_dev_socket_id(port_id) returns the NUMA socket to which an Ethernet device (NIC) is connected

// rx_conf: a pointer to a configuration structure of type rte_eth_rxconf that allows fine-grained control over the rx queue

// mbuf_pool: a pointer to the rte_mempool from which memory for the received packets mbufs will be allocated

if (rte_eth_rx_queue_setup(global_portid, 0, RX_BUFFER_SIZE, rte_eth_dev_socket_id(global_portid), NULL, mbuf_pool) < 0) {

rte_exit(EXIT_FAILURE, "failed to set up rx queue\n");

}

// set up transmit queue (tx queue) configuration

struct rte_eth_txconf txconf = dev_info.default_txconf;

// set the offload parameters for transmission, using the tx offloads from the default port configuration (none are enabled here)

txconf.offloads = port_config_default.txmode.offloads;

if (rte_eth_tx_queue_setup(global_portid, 0, TX_BUFFER_SIZE, rte_eth_dev_socket_id(global_portid), &txconf) < 0) {

rte_exit(EXIT_FAILURE, "failed to set up tx queue\n");

}

// start the Ethernet device (NIC)

if (rte_eth_dev_start(global_portid) < 0) {

rte_exit(EXIT_FAILURE, "failed to start Ethernet device\n");

}

printf("Ethernet device started\n");

return 0;

}

static int encode_udp_packet(uint8_t *msg, uint8_t *data, uint16_t total_len) {

// ether header

struct rte_ether_hdr *eth = (struct rte_ether_hdr *)msg;

rte_memcpy(eth->d_addr.addr_bytes, global_dmac, RTE_ETHER_ADDR_LEN);

rte_memcpy(eth->s_addr.addr_bytes, global_smac, RTE_ETHER_ADDR_LEN);

eth->ether_type = htons(RTE_ETHER_TYPE_IPV4);

// ip header

struct rte_ipv4_hdr *ip = (struct rte_ipv4_hdr *)(eth + 1);

ip->version_ihl = 0x45; // set the IP version (IPv4) and header length (5 * 4 = 20 bytes)

ip->type_of_service = 0; // default

ip->total_length = htons(total_len - sizeof(struct rte_ether_hdr)); // ip packet length

ip->packet_id = 0;

ip->fragment_offset = 0;

ip->time_to_live = 64; // TTL, the maximum number of hops through routers/gateways

ip->next_proto_id = IPPROTO_UDP;

ip->src_addr = global_sip;

ip->dst_addr = global_dip;

ip->hdr_checksum = 0; // clear it first so that stale data in the field does not influence the checksum

ip->hdr_checksum = rte_ipv4_cksum(ip);

// udp header

struct rte_udp_hdr *udp = (struct rte_udp_hdr *)(ip + 1);

udp->src_port = global_sport;

udp->dst_port = global_dport;

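uint16_t udplen = total_len - sizeof(struct rte_ether_hdr) - sizeof(struct rte_ipv4_hdr);

udp->dgram_len = htons(udplen); // udp datagram length: udp header plus payload

// data: copy only the payload, since udplen also counts the 8-byte udp header

rte_memcpy((uint8_t *)(udp + 1), data, udplen - sizeof(struct rte_udp_hdr));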

udp->dgram_cksum = 0; // clear it first

udp->dgram_cksum = rte_ipv4_udptcp_cksum(ip, udp);

return 0;

}

int main(int argc, char **argv) {

// init DPDK environment

if (rte_eal_init(argc, argv) < 0) {

rte_exit(EXIT_FAILURE, "EAL init failed\n");

}

// create a memory pool for storing packet buffers (mbufs)

// name: a name for the memory pool

// n: the number of elements (mbufs) to allocate in the pool. this defines the total number of packets the pool can hold

// cache_size: number of mbufs to cache per core. this can improve performance by reducing contention when multiple cores are using the pool

// priv_size: the size of the application-private area between the rte_mbuf structure and the data buffer, usually set to 0 if not needed

// data_room_size: the size of the data buffer in each mbuf, including RTE_PKTMBUF_HEADROOM. typically, this is RTE_MBUF_DEFAULT_BUF_SIZE for standard Ethernet frames

// socket_id: the NUMA (non-uniform memory access) node where the pool should be allocated. use rte_socket_id() to select the appropriate socket for the local core

struct rte_mempool *mbuf_pool = rte_pktmbuf_pool_create("mbuf_pool", NUM_MBUFS, 0, 0, RTE_MBUF_DEFAULT_BUF_SIZE, rte_socket_id());

if (mbuf_pool == NULL) {

rte_exit(EXIT_FAILURE, "failed to create mbuf pool\n");

}

// initialize the NIC ports (Ethernet ports)

init_port(mbuf_pool);

// loop to receive and process packets

while(1) {

// hold a burst of received packets, with each rte_mbuf representing an individual packet

struct rte_mbuf *mbufs[BURST_SIZE] = {0};

// retrieve a burst of input packets from a receive queue of an Ethernet device

// port_id: the port number of the NIC

// queue_id: the index of the receive queue from which to retrieve input packets. the value must be in the range [0, nb_rx_queue - 1] previously supplied to rte_eth_dev_configure(). we only have one rx queue in this example

// rx_pkts: the address of an array of pointers to *rte_mbuf* structures that must be large enough to store *nb_pkts* pointers in it

// nb_pkts: the maximum number of packets to retrieve in this burst

uint16_t num_recv = rte_eth_rx_burst(global_portid, 0, mbufs, BURST_SIZE);

// rte_eth_rx_burst() never returns more than nb_pkts, so a value above BURST_SIZE would mean the mbufs array has been overrun.

// this is purely a sanity check: packets dropped because the rx ring was full are reported in the port statistics (the imissed counter from rte_eth_stats_get()), not here

if (num_recv > BURST_SIZE) {

rte_exit(EXIT_FAILURE, "error receiving from Ethernet device\n"); // potential data corruption

}

uint64_t i;

// loop through each received packet and process it

for (i = 0; i < num_recv; i++) {

// extract the Ethernet header

// rte_pktmbuf_mtod(mbuf, type): this macro returns a pointer to the start of the packet data in the mbuf, cast to the given type

struct rte_ether_hdr *ethhdr = rte_pktmbuf_mtod(mbufs[i], struct rte_ether_hdr*);

if (ntohs(ethhdr->ether_type) != RTE_ETHER_TYPE_IPV4) {

rte_pktmbuf_free(mbufs[i]); // return the mbuf to the pool before skipping it

continue; // skip non-IPv4 packets

}

// parse the IPv4 header and check if it contains UDP

// rte_pktmbuf_mtod_offset extracts a pointer to a specific offset (the sizeof Ethernet header here) within a mbuf

struct rte_ipv4_hdr *iphdr = rte_pktmbuf_mtod_offset(mbufs[i], struct rte_ipv4_hdr *, sizeof(struct rte_ether_hdr));

if (iphdr->next_proto_id == IPPROTO_UDP) {

// handle UDP

// extracts the UDP header

// iphdr is a pointer to an rte_ipv4_hdr structure, representing the start of the IPv4 header

// adding 1 to this pointer moves it forward by sizeof(struct rte_ipv4_hdr), i.e. 20 bytes

// this lands on the UDP header only when the IPv4 header carries no options (IHL = 5), which this example assumes

// (struct rte_udp_hdr *) casts the new pointer to the rte_udp_hdr type

// now udphdr points to the UDP header of the packet

struct rte_udp_hdr *udphdr = (struct rte_udp_hdr *)(iphdr + 1);

// extracts the UDP payload

// adding 1 to udphdr pointer moves the pointer past the UDP header, to the UDP payload

// (char *) casts it to a string. this assumes the sender included a terminating '\0' in the payload; otherwise strlen() below can read past the end of the packet data

char *payload = (char *)(udphdr + 1);

if (payload != NULL && strlen(payload) > 0) {

printf("received UDP payload of length %zu: '%s'\n", strlen(payload), payload);

// prepare to send the udp payload back by reversing source and destination fields

rte_memcpy(global_smac, ethhdr->d_addr.addr_bytes, RTE_ETHER_ADDR_LEN);

rte_memcpy(global_dmac, ethhdr->s_addr.addr_bytes, RTE_ETHER_ADDR_LEN);

rte_memcpy(&global_sip, &iphdr->dst_addr, sizeof(uint32_t));

rte_memcpy(&global_dip, &iphdr->src_addr, sizeof(uint32_t));

rte_memcpy(&global_sport, &udphdr->dst_port, sizeof(uint16_t));

rte_memcpy(&global_dport, &udphdr->src_port, sizeof(uint16_t));

// print out source and destination

struct in_addr addr;

addr.s_addr = iphdr->src_addr;

printf("udp source: %s:%d\n", inet_ntoa(addr), ntohs(udphdr->src_port));

addr.s_addr = iphdr->dst_addr;

printf("udp destination: %s:%d\n", inet_ntoa(addr), ntohs(udphdr->dst_port));

// calculate the udp datagram length and total packet length

uint16_t dgram_len = ntohs(udphdr->dgram_len);

uint16_t total_len = dgram_len + sizeof(struct rte_ipv4_hdr) + sizeof(struct rte_ether_hdr);

// allocate a new mbuf (memory buffer) to store the outgoing packet

struct rte_mbuf *mbuf = rte_pktmbuf_alloc(mbuf_pool);

if (mbuf == NULL) {

rte_exit(EXIT_FAILURE, "failed to allocate mbuf\n");

}

// set the length values for the mbuf

mbuf->pkt_len = total_len; // the total packet length, summed over all segments of the mbuf chain

mbuf->data_len = total_len; // the amount of data in this segment. for a single-segment packet like this one, it equals pkt_len

// get a pointer to the message buffer in the mbuf

uint8_t *msg = rte_pktmbuf_mtod(mbuf, uint8_t *);

encode_udp_packet(msg, (uint8_t *)payload, total_len);

// send the packet back out through the network

// Transmit a burst of packets from a specified queue on an Ethernet port

// uint16_t rte_eth_tx_burst(

// uint16_t port_id, // The port identifier (the port on which to send the packets)

// uint16_t queue_id, // The queue identifier (specifies the TX queue to use for sending)

// struct rte_mbuf **tx_pkts, // Pointer to an array of pointers to mbufs (packets to send)

// uint16_t nb_pkts // Number of packets to send in the burst

// );

// the return value is the number of packets actually queued; mbufs that were not queued still belong to the application

if (rte_eth_tx_burst(global_portid, 0, &mbuf, 1) == 1) {

printf("udp sent\n");

} else {

rte_pktmbuf_free(mbuf);

printf("udp not sent\n");

}

} else {

printf("received empty or invalid payload\n");

}

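} else {

// handle non-UDP

}

// return the received mbuf to the pool; otherwise the pool is eventually exhausted

rte_pktmbuf_free(mbufs[i]);

}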

}

printf("hello dpdk\n");

}
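The receive loop above treats the UDP payload as a null-terminated string and finds the UDP header with `iphdr + 1`. A more defensive variant could take the payload length from the UDP length field and honor the IPv4 IHL field. The following is only a minimal sketch in plain C with no DPDK dependency, assuming the frame is available as a byte array; `udp_payload` and `read_be16` are hypothetical helper names, not DPDK APIs.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define ETH_HDR_LEN 14u      /* destination MAC + source MAC + EtherType */
#define IPV4_MIN_HDR_LEN 20u /* IPv4 header without options */
#define UDP_HDR_LEN 8u

/* Read a 16-bit big-endian (network order) value. */
static uint16_t read_be16(const uint8_t *p) {
    return (uint16_t)((p[0] << 8) | p[1]);
}

/* Hypothetical helper: locate the UDP payload inside a raw Ethernet frame.
 * Returns a pointer to the payload and stores its length in *payload_len,
 * or returns NULL if the frame is not a well-formed IPv4/UDP frame. */
static const uint8_t *udp_payload(const uint8_t *frame, size_t frame_len,
                                  size_t *payload_len) {
    assert(frame != NULL && payload_len != NULL);

    if (frame_len < ETH_HDR_LEN + IPV4_MIN_HDR_LEN + UDP_HDR_LEN)
        return NULL;
    if (read_be16(frame + 12) != 0x0800) /* EtherType: IPv4 */
        return NULL;

    const uint8_t *ip = frame + ETH_HDR_LEN;
    if ((ip[0] >> 4) != 4)               /* IP version */
        return NULL;
    size_t ihl = (size_t)(ip[0] & 0x0f) * 4u; /* header length in bytes */
    if (ihl < IPV4_MIN_HDR_LEN)
        return NULL;
    if (ip[9] != 17)                     /* protocol: UDP */
        return NULL;
    if (frame_len < ETH_HDR_LEN + ihl + UDP_HDR_LEN)
        return NULL;

    const uint8_t *udp = ip + ihl;
    size_t dgram_len = read_be16(udp + 4); /* UDP header + payload */
    if (dgram_len < UDP_HDR_LEN)
        return NULL;
    if (ETH_HDR_LEN + ihl + dgram_len > frame_len) /* length field lies */
        return NULL;

    *payload_len = dgram_len - UDP_HDR_LEN;
    return udp + UDP_HDR_LEN;
}
```

In the loop above, the frame pointer would presumably come from `rte_pktmbuf_mtod(mbufs[i], uint8_t *)` and the length from `rte_pktmbuf_data_len(mbufs[i])`, assuming a single-segment mbuf. The payload can then be printed with `printf("%.*s", (int)len, payload)` instead of relying on `strlen`.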

# Refer to DPDK examples in the source directory

# binary name

APP = hellodpdk

# all source are stored in SRCS-y

SRCS-y := main.c

# Build using pkg-config variables if possible

ifeq ($(shell pkg-config --exists libdpdk && echo 0),0)

all: shared

.PHONY: shared static

shared: build/$(APP)-shared

ln -sf $(APP)-shared build/$(APP)

static: build/$(APP)-static

ln -sf $(APP)-static build/$(APP)

PKGCONF=pkg-config --define-prefix

PC_FILE := $(shell $(PKGCONF) --path libdpdk)

CFLAGS += -O3 $(shell $(PKGCONF) --cflags libdpdk)

LDFLAGS_SHARED = $(shell $(PKGCONF) --libs libdpdk)

LDFLAGS_STATIC = -Wl,-Bstatic $(shell $(PKGCONF) --static --libs libdpdk)

build/$(APP)-shared: $(SRCS-y) Makefile $(PC_FILE) | build

$(CC) $(CFLAGS) $(SRCS-y) -o $@ $(LDFLAGS) $(LDFLAGS_SHARED)

build/$(APP)-static: $(SRCS-y) Makefile $(PC_FILE) | build

$(CC) $(CFLAGS) $(SRCS-y) -o $@ $(LDFLAGS) $(LDFLAGS_STATIC)

build:

@mkdir -p $@

.PHONY: clean

clean:

rm -f build/$(APP) build/$(APP)-static build/$(APP)-shared

test -d build && rmdir -p build || true

else

ifeq ($(RTE_SDK),)

$(error "Please define RTE_SDK environment variable")

endif

# Default target, detect a build directory, by looking for a path with a .config

RTE_TARGET ?= $(notdir $(abspath $(dir $(firstword $(wildcard $(RTE_SDK)/*/.config)))))

include $(RTE_SDK)/mk/rte.vars.mk

CFLAGS += -O3

CFLAGS += $(WERROR_FLAGS)

include $(RTE_SDK)/mk/rte.extapp.mk

endif

Check Hugepage Reservation

If you're getting errors related to hugepage configuration, such as "no mounted hugetlbfs found for that size", you can check the current hugepage status using:

cat /proc/meminfo | grep Huge

# this will display relevant HugePage statistics:

# HugePages_Total – Total number of HugePages reserved.

# HugePages_Free – Number of free HugePages available.

# HugePages_Rsvd – HugePages that have been committed to a mapping but not yet allocated.

# HugePages_Surp – Surplus HugePages (dynamically allocated beyond reservation).

For example:

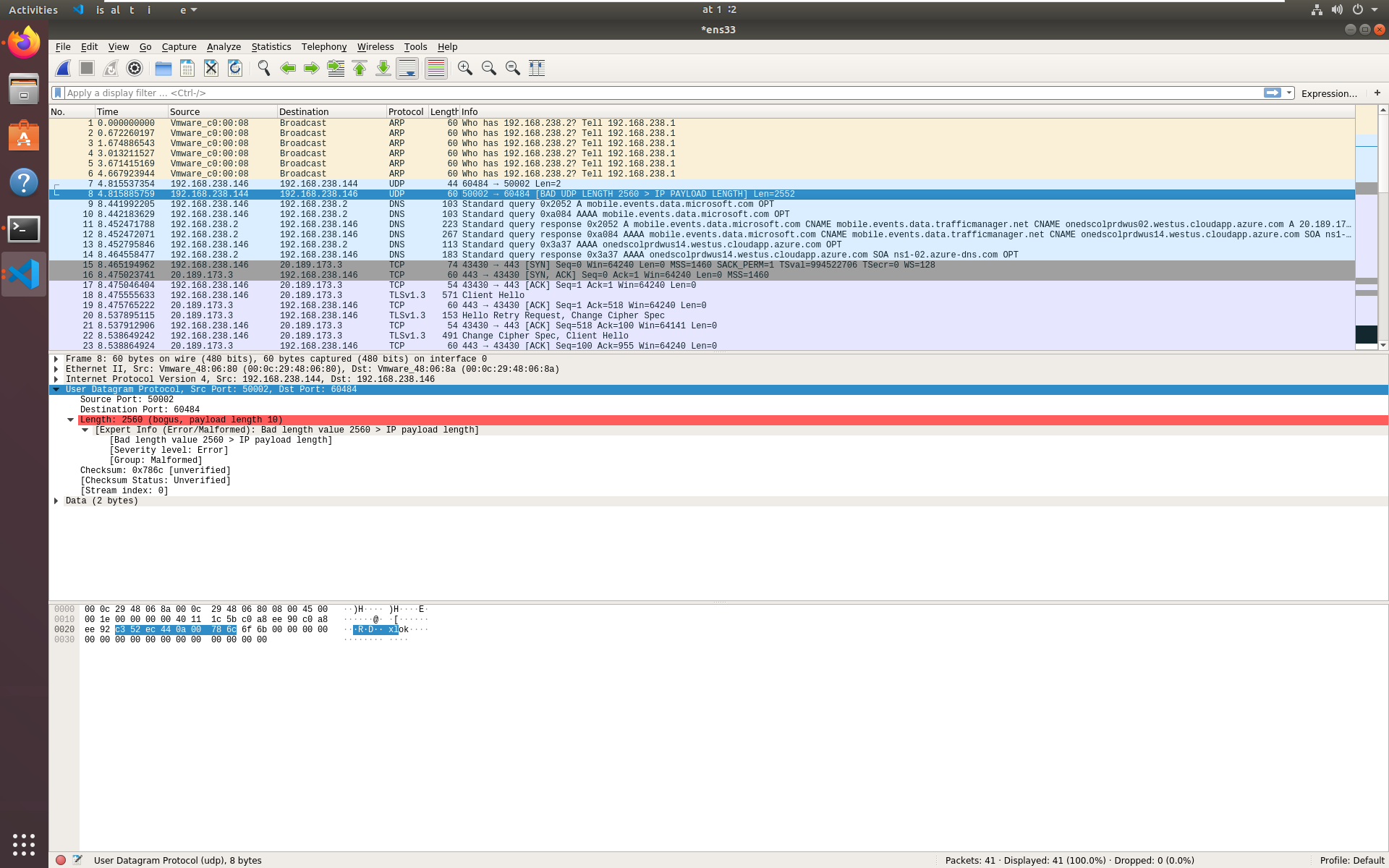
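The same counters can also be read from a program, for example to report a clear error before DPDK is initialized. Below is a minimal sketch, assuming the usual `/proc/meminfo` field names (`HugePages_Total`, `HugePages_Free`, `Hugepagesize`); `struct hugepage_info`, `parse_hugepages`, and `hugepage_reserved_bytes` are hypothetical names introduced for illustration.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical container for the three fields this sketch cares about. */
struct hugepage_info {
    unsigned long total;   /* HugePages_Total */
    unsigned long free;    /* HugePages_Free */
    unsigned long size_kb; /* Hugepagesize, in kB */
};

/* Parse /proc/meminfo-style text line by line.
 * Returns 0 if all three fields were found, -1 otherwise. */
static int parse_hugepages(const char *text, struct hugepage_info *out) {
    assert(text != NULL && out != NULL);
    int found = 0;
    const char *line = text;

    while (*line != '\0') {
        unsigned long v;
        if (sscanf(line, "HugePages_Total: %lu", &v) == 1) {
            out->total = v;
            found++;
        } else if (sscanf(line, "HugePages_Free: %lu", &v) == 1) {
            out->free = v;
            found++;
        } else if (sscanf(line, "Hugepagesize: %lu kB", &v) == 1) {
            out->size_kb = v;
            found++;
        }
        const char *nl = strchr(line, '\n'); /* advance to the next line */
        if (nl == NULL)
            break;
        line = nl + 1;
    }
    return found == 3 ? 0 : -1;
}

/* Total memory reserved for hugepages, in bytes. */
static unsigned long long hugepage_reserved_bytes(const struct hugepage_info *info) {
    return (unsigned long long)info->total * info->size_kb * 1024ULL;
}
```

A caller would read `/proc/meminfo` into a buffer (for instance with `fopen` and `fread`) and pass it to `parse_hugepages`. If `total` is 0, no hugepages have been reserved yet, which is consistent with the hugetlbfs error mentioned above.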

Wireshark is a great tool for inspecting inbound and outbound packets. It can help analyze packet contents, including headers, payload, and encoding.
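When a packet built by `encode_udp_packet` shows up in a capture with a suspicious IPv4 header checksum, it helps to know how the value is computed. The sketch below implements the standard Internet checksum (one's-complement sum of 16-bit words, as described in RFC 1071) over an IPv4 header. It is a stand-in for illustration only: the example in this post calls `rte_ipv4_cksum()`, and `ipv4_header_checksum` is a hypothetical name.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Compute the IPv4 header checksum over `len` bytes (20 for a header
 * without options). The checksum field (bytes 10 and 11) must be zero
 * when computing a new checksum. The result is returned as a host-order
 * number whose high byte goes into byte 10 and low byte into byte 11. */
static uint16_t ipv4_header_checksum(const uint8_t *hdr, size_t len) {
    assert(hdr != NULL && len % 2 == 0);
    uint32_t sum = 0;

    /* Add the header as a sequence of big-endian 16-bit words. */
    for (size_t i = 0; i < len; i += 2) {
        sum += (uint32_t)((hdr[i] << 8) | hdr[i + 1]);
    }
    /* Fold the carries back into the low 16 bits. */
    while (sum >> 16) {
        sum = (sum & 0xffffu) + (sum >> 16);
    }
    /* The checksum is the one's complement of the folded sum. */
    return (uint16_t)~sum;
}
```

Running the same function over a received header with its checksum field left in place yields 0 when the header is intact, which is a quick way to cross-check what a capture shows.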

- Sun Feb 2 9:57 PM, PA

- Sat Feb 8 9:47 PM, PA

-

NIC stands for Network Interface Controller. It is the hardware component that connects a computer or device to a network, allowing it to send and receive data packets. The NIC handles the physical and data link layers (Layer 1 and Layer 2) of the OSI model, managing communication over wired or wireless connections. In the context of DPDK, the NIC is accessed directly from user space for high-performance packet processing, bypassing the kernel's networking stack for faster data flow. ↩

-

The networking stack in the kernel, often referred to as the TCP/IP stack, is a set of software layers that handles network communication using different protocols. It includes the link layer (Ethernet, Wi-Fi), the network/internet layer (IP), the transport layer (TCP, UDP), and the application layer (HTTP, DNS, FTP). ↩

-

KNI (Kernel Network Interface) in DPDK is a feature that allows DPDK applications to interact with the kernel’s networking stack when needed. It creates a virtual network interface in the kernel that allows packets to be transferred between the user space (where DPDK operates) and the kernel networking stack. KNI is useful for scenarios where DPDK needs to send or receive packets using kernel-based features, like routing or firewalling, while maintaining the performance benefits of user-space processing. ↩

-

A hugepage is a large memory page size supported by modern CPUs to reduce TLB5 (Translation Lookaside Buffer) misses and improve memory performance. Standard memory pages are 4KB, while hugepages can be 2MB or even 1GB, reducing overhead in memory management. DPDK requires hugepages because its packet buffers and memory pools rely on large, contiguous memory allocations for high-speed packet processing. While some DPDK configurations may work without hugepages, performance would be significantly degraded due to increased TLB misses, memory fragmentation, and slower DMA6 transfers. ↩

-

TLB is a small cache in the CPU that stores mappings from virtual memory to physical memory. Regular memory pages are 4KB, meaning large memory allocations require many pages, filling up the TLB quickly. Once the TLB is full, new memory accesses cause TLB misses, forcing the CPU to walk the page tables, which slows down packet processing. Hugepages (2MB or 1GB) reduce the number of required pages, decreasing TLB misses and improving performance. ↩

-

DPDK uses DMA (Direct Memory Access) for fast data movement between NICs and memory, bypassing the CPU. Without hugepages, DMA needs to handle many small fragmented pages, increasing the overhead of address lookups. Hugepages provide large contiguous memory blocks, which makes DMA transfers more efficient and lowers latency. ↩

-

Ubuntu 18.04.6 LTS (Bionic Beaver), 64-bit PC (AMD64) desktop image (link). DPDK 19.08 is tested on Ubuntu 16.04, 16.10, 18.04, and 19.04 (link). I initially tested DPDK 19.08 on Ubuntu 24.04.1 but encountered compilation issues due to the incompatible kernel version. ↩

-

VMware Workstation Pro 17.6.2. ↩

-

DPDK 19.08 docs, release notes. ↩